Nice OpenEdge DB charts with Docker + InfluxDB + Grafana

As part of a migration project, I wanted a quick way to monitor a few database statistics, and also to provide nice and useful charts without having to rely on a huge analytics layer. A quick Google search later (well, not so quick, as I lost a lot of time reading various blogs and websites completely unrelated to the topic!), Grafana turned out to be the tool I was looking for (at least on the paper^Hscreen). As advertised on their website, Grafana is "The leading graph and dashboard builder for visualizing time series metrics". I couldn't be wrong...

Step 0: install Docker

Docker is a lightweight virtualization technology that lets you run multiple applications independently in containers, without having to virtualize the entire operating system. Looking for a new Tomcat instance? Just install Docker from your favorite package manager ('yum install docker' for example), then execute the image with 'docker run -p 8888:8080 tomcat:8.0'. In a few seconds, you'll have a new Tomcat 8 container running, and you'll be able to access http://localhost:8888. Need another one? Execute 'docker run -p 8889:8080 tomcat:8.0'. A few seconds later, another Tomcat 8 instance will be up and running, accessible from http://localhost:8889.

So why Docker here? InfluxDB and Grafana are based on languages I'm not used to, and I didn't want to spend time turning various knobs to make them work. By using pre-configured images, I knew it would work out of the box, and I would just have to redirect a few ports.

Step 1: install InfluxDB

If you're an OpenEdge Management user, you probably know that the trend database stores metrics. Same story here: we'll have to store a lot of metrics, but why bother installing a full RDBMS? While I find OpenEdge extremely powerful, there's no one-size-fits-all solution, and when it comes to time-series data, InfluxDB is an excellent fit!

Using Docker, starting a new InfluxDB instance takes a single command:

docker run -d -p 8083:8083 -p 8086:8086 --name influxdb tutum/influxdb

Now, just verify that you can connect to http://localhost:8083, and create a new database with 'CREATE DATABASE foo'.
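The same statement can also be sent to InfluxDB's HTTP API on port 8086, which is handy when the database has to be created from a script. The sketch below only builds the request URL for the /query endpoint; the helper name is made up for illustration, and depending on the InfluxDB version the request may have to be sent as a POST rather than a GET.

```python
from urllib.parse import urlencode


def influx_query_url(base_url, statement):
    """Build the URL of InfluxDB's /query endpoint for one statement.

    Hypothetical helper: it only URL-encodes the statement into the
    'q' parameter, it does not send anything.
    """
    return base_url.rstrip("/") + "/query?" + urlencode({"q": statement})


if __name__ == "__main__":
    # 'foo' is the database name used throughout this post
    print(influx_query_url("http://localhost:8086", "CREATE DATABASE foo"))
```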

Step 2: install Grafana

Grafana is the Web layer which fetches data from InfluxDB and displays it as gorgeous charts.

Using Docker, it's still easy:

docker run -d -p 3000:3000 --link influxdb:influxdb --name grafana grafana/grafana

The --link switch lets Docker know that the Grafana container must be able to see the InfluxDB container (which Grafana can then reach under the host name influxdb). You can now verify that you can connect to http://localhost:3000

Step 3: collect OpenEdge metrics

Some time ago, George Potemkin published a set of procedures to collect metrics from OpenEdge databases. With a few lines of code, I've been able to change the output (the OpenEdge EXPORT statement) to something which can be read by InfluxDB.

You can find those updated procedures on GitHub
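For readers who have never seen it, the text format accepted by InfluxDB's /write endpoint is the line protocol: a measurement name, optional comma-separated tags, a space, then one or more fields. The exact output of the updated procedures is not shown here, so the following is only a minimal sketch of that format; the measurement, tag and field names are hypothetical, and every value is written as a float to keep the example short.

```python
def _escape(text, chars):
    """Backslash-escape each of the given special characters."""
    for ch in chars:
        text = text.replace(ch, "\\" + ch)
    return text


def to_line(measurement, tags, fields):
    """Format one point as InfluxDB line protocol (numeric fields only).

    Tags and fields are sorted by key so the output is stable.
    No timestamp is written, so the server assigns its own.
    """
    head = _escape(measurement, ", ")
    for key in sorted(tags):
        head += "," + _escape(key, ",= ") + "=" + _escape(str(tags[key]), ",= ")
    body = ",".join(
        _escape(key, ",= ") + "=" + repr(float(fields[key]))
        for key in sorted(fields)
    )
    return head + " " + body


if __name__ == "__main__":
    # Hypothetical names, not the ones produced by DbStatDump.p
    print(to_line("db_buffer",
                  {"host": "hpux01", "db": "sports"},
                  {"logical_reads": 1234, "os_reads": 56}))
```

Tags (here the host and database names) are what the charts can later be filtered and grouped on, while fields carry the measured values.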

If you know how to start an OpenEdge session, it won't be difficult to collect metrics. Just define the PROPATH variable to point at the procedures, execute _progres connected to the set of DBs you want to monitor, and execute DbStatDump.p with '-param /path/to/snapshot.d'. This file will store a snapshot of the latest values, so that the next execution will compute the difference. In short, if you trigger the statistics every five minutes, the generated metrics will only show what happened during the last five minutes.
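The snapshot mechanism boils down to subtracting the previous counter values from the current ones. Here is a small sketch of that idea in Python, not the actual ABL code of the procedures; the counter names are hypothetical, and the handling of a counter that went backwards (for example after a database restart) is an assumption of this sketch.

```python
def compute_deltas(previous, current):
    """Return the activity since the previous snapshot.

    previous and current map a counter name to its cumulative value.
    """
    deltas = {}
    for name, value in current.items():
        before = previous.get(name)
        if before is None or value < before:
            # New counter, or counter reset since the last snapshot:
            # report the current value as-is (assumption of this sketch)
            deltas[name] = value
        else:
            deltas[name] = value - before
    return deltas


if __name__ == "__main__":
    snapshot = {"logical_reads": 1000, "os_reads": 40}
    latest = {"logical_reads": 1500, "os_reads": 45}
    # Only what happened between the two executions is reported
    print(compute_deltas(snapshot, latest))
```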

The working directory will now contain a set of *.txt files you'll have to send to InfluxDB. This part is extremely easy as InfluxDB can be fed using a REST interface; in my case, I'm just using curl this way:

find /path/to/stats -name "*.txt" -print | while read filename ; do /usr/local/bin/curl -X POST -i --data-binary @${filename} "http://localhost:8086/write?db=foo" && rm ${filename} ; done

Step 4: enjoy nice charts !

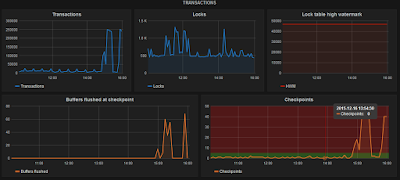

Once data is flowing into the DB, you can start designing charts, and that's the really easy part. An example for the query editor of the main buffer pool reads:

A few screenshots

A few more facts

- In order to use the latest InfluxDB version, I had to regenerate the Docker image. Quite easy to do by editing the Dockerfile at https://github.com/tutumcloud/influxdb. EDIT : this has been fixed in the main GitHub repository.

- Same problem for Grafana, the Docker image wasn't up to date. Regenerating the image was also really easy, just had to change the version number in the Dockerfile.

- InfluxDB and Grafana were installed on an EC2 instance, behind a reverse proxy. A riverside-software.fr subdomain was used to redirect the calls to the Docker instance, without any problem

- The statistics are collected on an HP-UX 11.31 box, running OpenEdge 11.3. Twelve databases are monitored, with around 400 concurrent sessions, and the biggest DB is around 170 GB, with 350 tables and 1200 indexes

- Statistics are triggered from a remote Jenkins server, every 5 minutes, as sending metrics requires an Internet connection. This Jenkins server is also running on HP-UX, and depothelper has been used to install curl. It takes approximately 5 to 6 seconds to fetch statistics from the 12 databases.

- Retention policy can be defined in InfluxDB, especially useful if you don't want to keep your data for a long time, and if you don't want to manually clean data (compact job anymore ? :-) )

- Don't expect Grafana to display every kind of chart. Grafana displays time-based series, so no pie chart for example (although such a series could be represented as a pie chart, it's still not possible).

- Have fun !

OpenEdge plugin for Jenkins

A simple project to configure OpenEdge versions, install locations on master and slaves, and to define OpenEdge axis in matrix jobs

Back from the PUG Challenge in Brussels

The result of a collaboration between the various European Progress user groups, this year's PUG Challenge was held in Brussels at the Radisson Blu Royal Hotel. More than 450 participants from 24 countries gathered for three days of sessions (including a full day dedicated to workshops) on topics such as database administration, application modernization, development with OpenEdge Mobile, Corticon integration, Rollbase, the roadmap for the coming months, and more.

Phil Pead (CEO of Progress) spoke alongside Karen Padir (Chief Technical Officer) to present their vision of the future of Progress Software. As usual, they remained available for several hours to talk and exchange views with participants.

PCT - 10th anniversary

- It started as a side project when I was working for Phenix Engineering, dealing with multiple combinations of the same product, on version 9 and 10, Linux and Windows, and OpenEdge / Oracle / SQL Server databases

- While the first version was released on June 6th, the first commit in the repository is only from July 28th

- The first patch by an external contributor was committed on November 3rd 2003 by Flurix (don't know his real name, or the company)

- The first version included PCTCompile, PCTCreateBase, PCTLibrary, PCTLoadSchema and PCTDumpSchema tasks. No PCTRun at this time.

- The first unit tests were committed on October 26th, 2003 : 7 tests for this first commit

- There are now more than 230 unit tests, multiplied by 7 test platforms, so approximately 1600 test cases for each build

- There was no Hudson, no Jenkins in 2003. PCT was compiled every night on a Pentium (60 MHz or so) sitting under my desk

- As of today, there have been 1358 commits (a bit more than one commit every 3 days, not a very big project!)

- I included some statistics on PCT usage in September 2009, to know if it was worth working on PCT. I don't remember the exact results, but they were good enough to continue...

- The latest build of PCT (released mid-March) was downloaded 160 times. Not bad for an OpenEdge only dev toolkit.

- I will create a 1.0 (or 10.0 ?) version to celebrate this anniversary

- In the meantime, I released 18 versions from 2003 to 2011...

- And a bit less than 40 continuous integration builds since 2011

- Under the hood, more than 200 builds from the test repository

- PCT started on SourceForge, and migrated to Google Code and Mercurial (unknown date, I'd say in 2009 or 2010)

- The first time PCT was presented in a public conference was the German PUG event in 2009 (along with Padeo, a deployment solution for OpenEdge, using MSI installers)

- Multi-threaded compilations were introduced in 2007 because I needed a test project to play with Java synchronization mechanisms. I never really expected people would use it, and was a bit surprised when the first bug reports came in

- PCT will be a foundation of a brand new source code analysis project, based on Sonar Source. A preview can be seen here

- If you're working with PCT (or not) and would like to improve it: you can test it, report bugs, suggest improvements, provide patches... You can also correct my bad English in the documentation.

As the 1.0 (or 10.0) version will be released during the US PUG Challenge, I'll be happy to share a drink with PCT users and contributors ! And on the good side of the ocean :-) I'll be happy to do the same during the EU PUG Challenge !

Gilles

Next conferences

If you're looking for a way to streamline your OpenEdge application development lifecycle or to improve your code quality, you just have to attend those sessions!

OpenEdge class documentation

A PCT task has been created, to be able to generate the documentation as part of your continuous integration process.

Javadoc-like documentation for OpenEdge classes

This task is based on the implementation of the ABL parser included in OpenEdge Architect, and this first successful attempt of source code parsing offers an extremely large range of possibilities in source code analysis (conformance to coding standards, potential problems, deprecated features, ...).

Be sure to read the requirements for the new ClassDocumentation task, and leave comments or contact us directly !

Webspeed setup on Tomcat

As I'm mainly working with Java, I always have a servlet container ready to run. But the Progress documentation doesn't mention Tomcat as a deployment platform, so here is a guide!

Step 1 : Download Tomcat

Step 2 : create a new webapp

Step 3 : copy static files

Step 4 : create web.xml

<?xml version="1.0" encoding="ISO-8859-1"?>
<web-app xmlns="http://java.sun.com/xml/ns/javaee"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_2_5.xsd"
         version="2.5">
  <servlet>
    <servlet-name>cgi</servlet-name>
    <servlet-class>org.apache.catalina.servlets.CGIServlet</servlet-class>
    <init-param>
      <param-name>debug</param-name>
      <param-value>0</param-value>
    </init-param>
    <init-param>
      <param-name>cgiPathPrefix</param-name>
      <param-value>WEB-INF/cgi</param-value>
    </init-param>
    <init-param>
      <param-name>executable</param-name>
      <param-value></param-value>
    </init-param>
    <init-param>
      <param-name>passShellEnvironment</param-name>
      <param-value>true</param-value>
    </init-param>
    <load-on-startup>4</load-on-startup>
  </servlet>
  <servlet-mapping>
    <servlet-name>cgi</servlet-name>
    <url-pattern>/cgi/*</url-pattern>
  </servlet-mapping>
</web-app>

Step 5 : privileged application

Create an XML file in $CATALINA_HOME/conf/Catalina/localhost, with the same name as the webapp directory: if you created a directory called webspeed110 in step 2, then create webspeed110.xml. The content of this file is:

<Context privileged="true" />

Step 6 : configure your webspeed broker

This is OpenEdge configuration, and it won't be covered here...

Step 7 : play !

Start Tomcat, and go to http://localhost:8080/webspeed110/cgi/cgiip.exe/WService=wsbroker1/workshop

This URL assumes that you're running with the default Webspeed broker wsbroker1.
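To make the structure of that URL explicit, here is a small sketch that assembles it from its parts: the webapp directory from step 2, the /cgi/* servlet mapping from step 4, the messenger executable, and the broker name. The function and parameter names are made up for illustration.

```python
def webspeed_url(host, port, webapp, messenger, broker, path):
    """Assemble a WebSpeed URL served through Tomcat's CGI servlet.

    '/cgi/' matches the <url-pattern>/cgi/*</url-pattern> mapping of web.xml.
    """
    return f"http://{host}:{port}/{webapp}/cgi/{messenger}/WService={broker}/{path}"


if __name__ == "__main__":
    print(webspeed_url("localhost", 8080, "webspeed110",
                       "cgiip.exe", "wsbroker1", "workshop"))
```

If your broker is not the default one, only the WService part of the URL changes.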

Bonus step 1 : URL rewriting

<filter>
  <filter-name>UrlRewriteFilter</filter-name>
  <filter-class>org.tuckey.web.filters.urlrewrite.UrlRewriteFilter</filter-class>
  <init-param>
    <param-name>confReloadCheckInterval</param-name>
    <param-value>0</param-value>
  </init-param>
</filter>
<filter-mapping>
  <filter-name>UrlRewriteFilter</filter-name>
  <url-pattern>/*</url-pattern>
  <dispatcher>REQUEST</dispatcher>
  <dispatcher>FORWARD</dispatcher>
</filter-mapping>

<?xml version="1.0" encoding="utf-8"?>
<!DOCTYPE urlrewrite PUBLIC "-//tuckey.org//DTD UrlRewrite 3.2//EN"
        "http://tuckey.org/res/dtds/urlrewrite3.2.dtd">
<urlrewrite>
  <rule>
    <from>/cgiip/(.*)</from>
    <to>/cgi/cgiip.exe/WService=wsbroker1/$1</to>
  </rule>

  <!-- default rules included with urlrewrite -->
  <rule>
    <note>
      The rule means that requests to /test/status/ will be redirected to /rewrite-status
      the url will be rewritten.
    </note>
    <from>/test/status/</from>
    <to type="redirect">%{context-path}/rewrite-status</to>
  </rule>

  <outbound-rule>
    <note>
      The outbound-rule specifies that when response.encodeURL is called (if you are using JSTL c:url)
      the url /rewrite-status will be rewritten to /test/status/.
      The above rule and this outbound-rule means that end users should never see the
      url /rewrite-status only /test/status/ both in their location bar and in hyperlinks
      in your pages.
    </note>
    <from>/rewrite-status</from>
    <to>/test/status/</to>
  </outbound-rule>
</urlrewrite>
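The effect of the first rule can be checked outside Tomcat with a plain regular expression. The sketch below only approximates what UrlRewriteFilter does with the /cgiip/(.*) rule (anchoring the pattern at both ends is an assumption of this sketch); the function name is hypothetical.

```python
import re

# Same pattern and target as the first <rule> above, with $1 written
# as \1 because that is how Python references a captured group
RULE_FROM = re.compile(r"^/cgiip/(.*)$")
RULE_TO = r"/cgi/cgiip.exe/WService=wsbroker1/\1"


def rewrite(path):
    """Return the rewritten path, or the path unchanged if the rule doesn't match."""
    match = RULE_FROM.match(path)
    if match is None:
        return path
    return match.expand(RULE_TO)


if __name__ == "__main__":
    # The short URL is mapped onto the full CGI messenger URL
    print(rewrite("/cgiip/workshop"))
```

With this rule in place, http://localhost:8080/webspeed110/cgiip/workshop becomes a shorter equivalent of the URL used in step 7.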

Bonus step 2 : cgi.bat (and a reminder for myself)

<?xml version="1.0" encoding="ISO-8859-1"?>
<web-app xmlns="http://java.sun.com/xml/ns/javaee"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_2_5.xsd"
         version="2.5">
  <servlet>
    <servlet-name>cgi</servlet-name>
    <servlet-class>org.apache.catalina.servlets.CGIServlet</servlet-class>
    <init-param>
      <param-name>debug</param-name>
      <param-value>0</param-value>
    </init-param>
    <init-param>
      <param-name>cgiPathPrefix</param-name>
      <param-value>WEB-INF/cgi</param-value>
    </init-param>
    <init-param>
      <param-name>executable</param-name>
      <param-value>c:\windows\system32\cmd.exe</param-value>
    </init-param>
    <init-param>
      <param-name>executable-arg-1</param-name>
      <param-value>/c</param-value>
    </init-param>
    <init-param>
      <param-name>passShellEnvironment</param-name>
      <param-value>true</param-value>
    </init-param>
    <load-on-startup>4</load-on-startup>
  </servlet>
  <servlet-mapping>
    <servlet-name>cgi</servlet-name>
    <url-pattern>/cgi/*</url-pattern>
  </servlet-mapping>
</web-app>

@echo off
set DLC=C:\Progress\OPENED~1.5
set PROMSGS=%DLC%\promsgs
set WRKDIR=%TEMP%
REM 3055 is default port of wsbroker1
%DLC%\bin\cgiip.exe localhost 3055
